We evaluate the mixing of Gibbs sampling on wavelet coefficients by running $M$ parallel chains that sample from the fitted sparse FRAME model $p(I; B, \lambda)$, where $B = \{B_i, i = 1, \ldots, n\}$ are the selected wavelets. These chains start from independent white noise images. Let $I_{m,t}$ be the image of chain $m$ produced at iteration $t$, where each iteration is a sweep of the Gibbs sampler with random scan. Let $r_{m,t,i} = \langle I_{m,t}, B_i \rangle$ be the response of the synthesized image $I_{m,t}$ to $B_i$. Let $R_{t,i} = (r_{m,t,i}, m = 1, \ldots, M)$ be the $M$-dimensional vector of these responses.

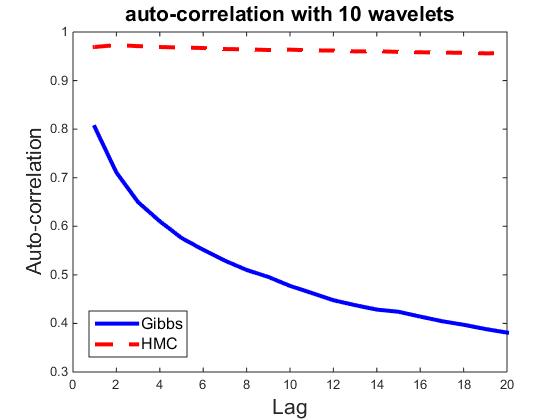

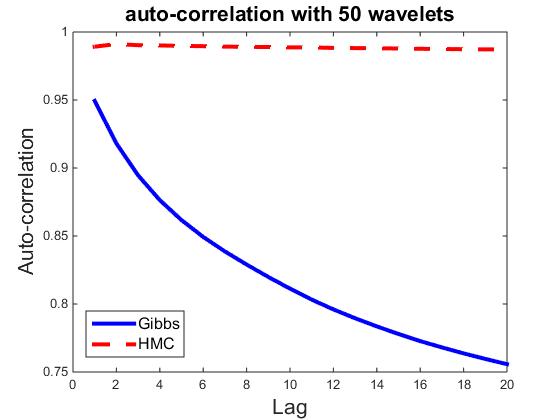

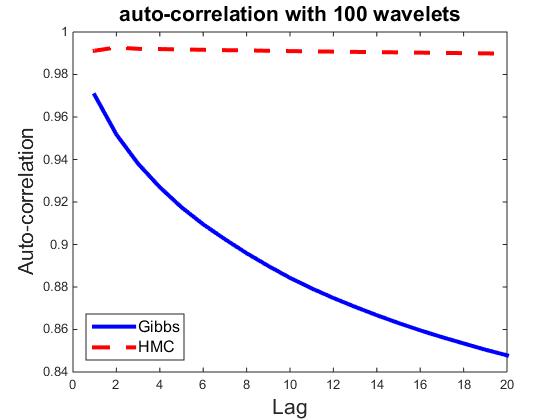

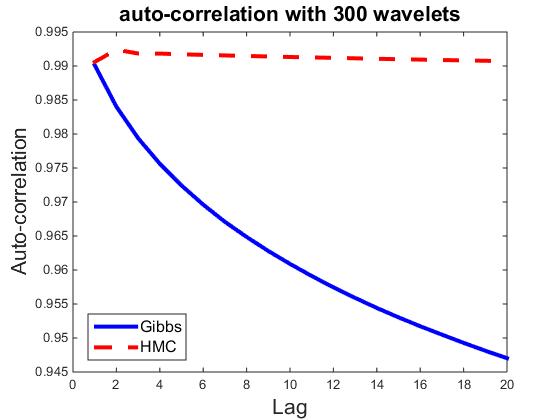

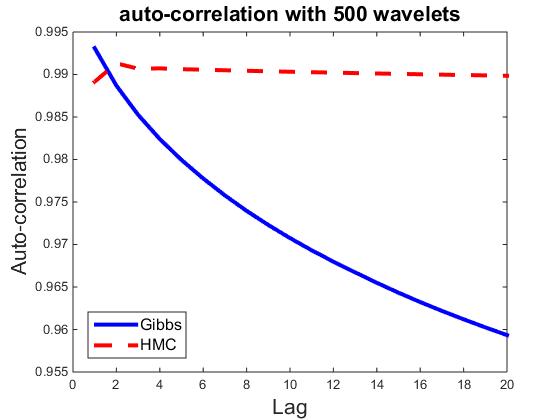

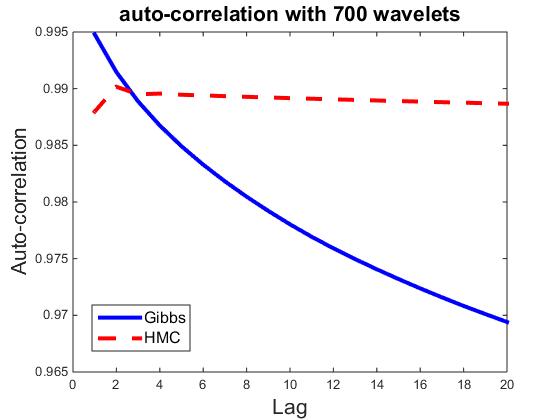

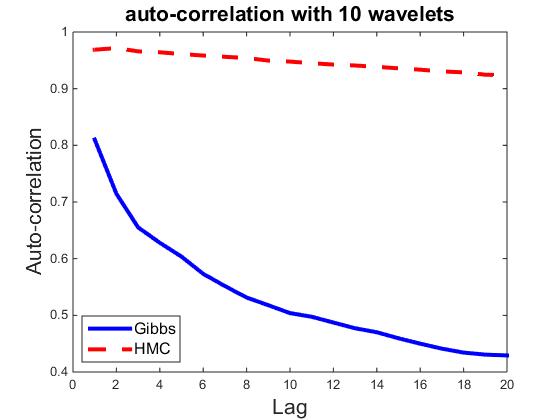

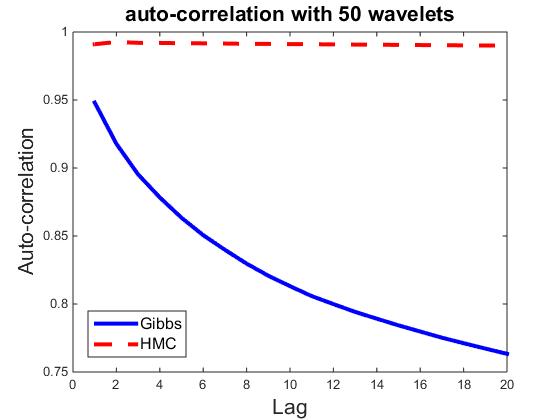

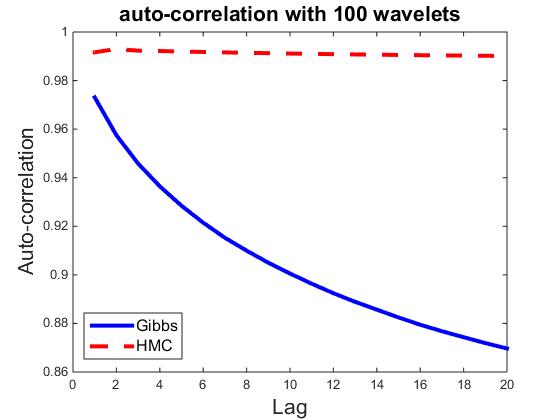

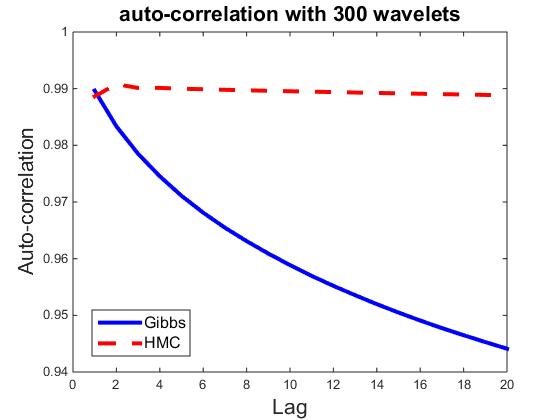

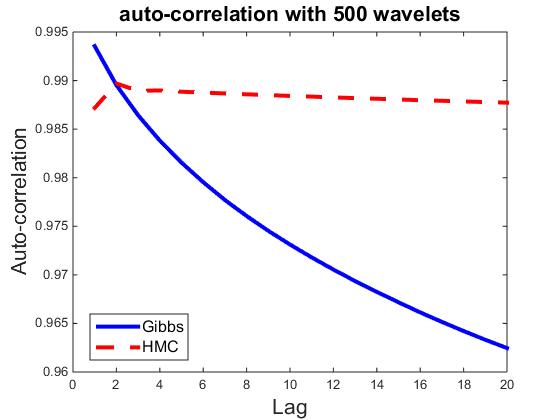

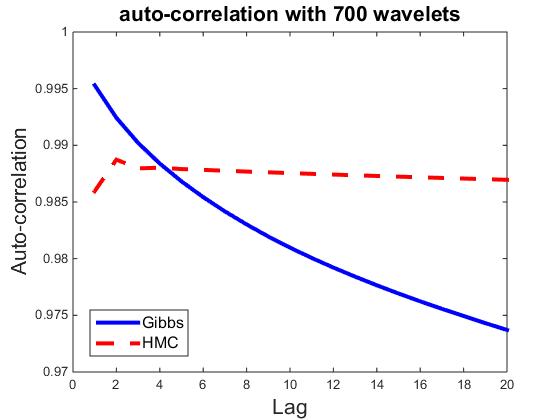

Fix $t = 100$, and let $\rho_{k,i}$ be the correlation, computed across chains, between the vectors $R_{t,i}$ and $R_{t+k,i}$. Then $\rho_k = \sum_{i=1}^{n} \rho_{k,i}/n$ measures the average autocorrelation at lag $k$ and indicates how well the parallel chains are mixing. As a comparison, we also compute $\rho_k$ for the HMC sampler, where each iteration consists of 30 leapfrog steps. The figures plot $\rho_k$ for $k = 1, \ldots, 20$ and for $n = 10, 50, 100, 300, 500,$ and $700$, where each plot corresponds to a particular $n$. It seems that HMC can be easily trapped in local modes, whereas the Gibbs sampler is less prone to them.
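The diagnostic described here can be sketched in a few lines of NumPy. This is a minimal illustration and not the authors' code: the function name `average_lag_autocorrelation`, the array layout `(chains, iterations, wavelets)`, and the zero-based iteration index are all assumptions made for the example. It presumes the filter responses have already been recorded for every chain and iteration, and that no response is constant across chains (otherwise the correlation is undefined).

```python
import numpy as np


def average_lag_autocorrelation(responses, t, max_lag):
    """Average lag-k correlation across parallel chains (illustrative sketch).

    responses: array of shape (num_chains, num_iterations, num_wavelets),
        where responses[m, s, i] is the response of chain m at iteration s
        to wavelet i (assumed layout, zero-based iteration index).
    t: reference iteration (zero-based index into axis 1).
    max_lag: largest lag k to evaluate; requires t + max_lag < num_iterations.

    Returns an array rho of length max_lag with rho[k - 1] equal to the
    correlation at lag k averaged over all wavelets.
    """
    responses = np.asarray(responses, dtype=float)
    if t + max_lag >= responses.shape[1]:
        raise ValueError("t + max_lag must be a valid iteration index")

    # Responses at the reference iteration, centered across chains.
    base = responses[:, t, :]
    base = base - base.mean(axis=0)

    rho = np.empty(max_lag)
    for k in range(1, max_lag + 1):
        # Responses k iterations later, centered across chains.
        lagged = responses[:, t + k, :]
        lagged = lagged - lagged.mean(axis=0)

        # Pearson correlation per wavelet, taken over the chain axis.
        cov = (base * lagged).sum(axis=0)
        scale = np.sqrt((base ** 2).sum(axis=0) * (lagged ** 2).sum(axis=0))
        per_wavelet = cov / scale

        # Average over the wavelets to obtain the lag-k summary.
        rho[k - 1] = per_wavelet.mean()
    return rho
```

Calling the function once on responses recorded from the Gibbs chains and once on responses from the HMC chains, with the same `t` and `max_lag=20`, would give two curves that can be plotted against the lag; a curve that decays toward zero more quickly suggests better mixing.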