Inferring Shared Attention in Social Scene Videos

Lifeng Fan⚹1, Yixin Chen⚹1, Ping Wei2,1, Wenguan Wang3,1, and Song-Chun Zhu1

1 Center for Vision, Cognition, Learning, and Autonomy, UCLA

3 Beijing Institute of Technology

(⚹ indicates equal contribution.)

Abstract

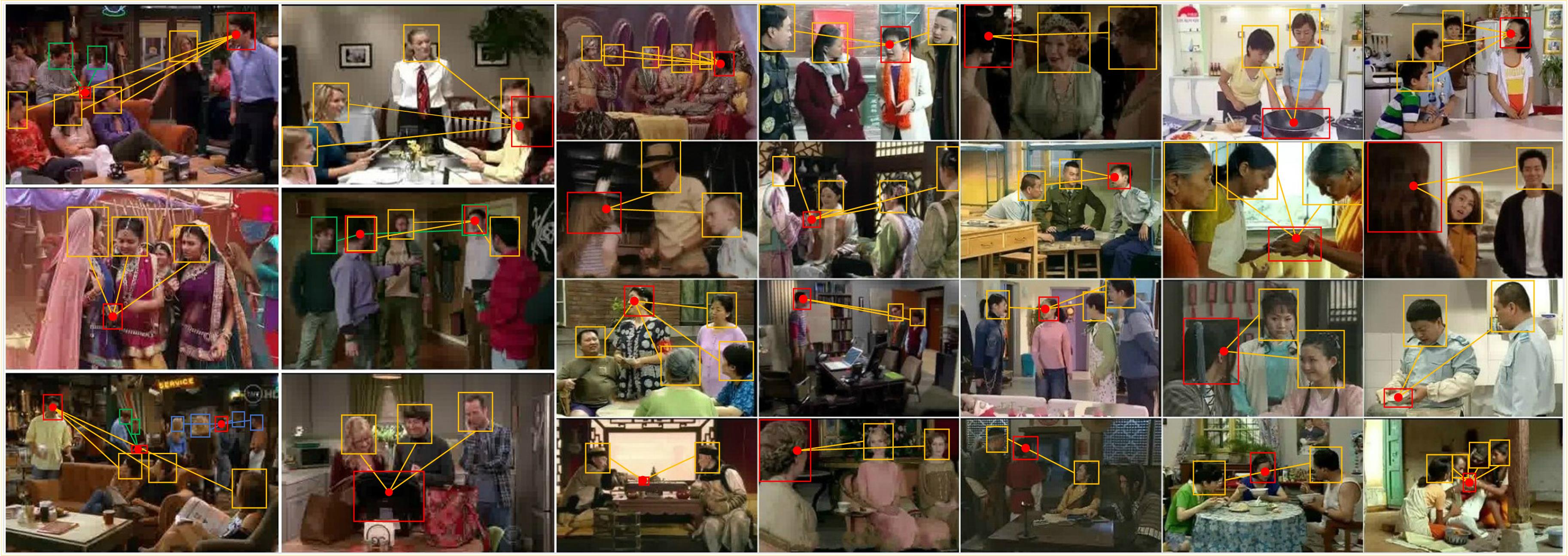

This paper addresses a new problem: inferring shared attention in third-person social scene videos. Shared attention is a phenomenon in which two or more individuals simultaneously look at a common target in a social scene. Perceiving and identifying shared attention in videos plays a crucial role in social activities and in social scene understanding. We propose a spatial-temporal neural network that detects shared attention intervals in videos and predicts shared attention locations in frames. In each video frame, human gaze directions and potential target boxes are the two key features for spatially detecting shared attention in the social scene. In the temporal domain, a convolutional Long Short-Term Memory (ConvLSTM) network exploits temporal continuity and transition constraints to optimize the predicted shared attention heatmap. We collect a new dataset, VideoCoAtt, from public TV show videos; it contains 380 complex video sequences with more than 492,000 frames covering diverse social scenes for shared attention study. Experiments on this dataset show that our model can effectively infer shared attention in videos. We also empirically verify the effectiveness of the different components of our model.
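To make the definition concrete (two or more people looking at a common target), here is a minimal, hand-written geometric sketch. It is not the authors' spatial-temporal network. It assumes each person is given as a 2D head position plus a gaze direction vector in image coordinates and each potential target as an axis-aligned box. It counts how many gaze rays hit each box, then merges consecutive positive frames into intervals. The minimum interval length is a crude stand-in for the temporal-continuity constraint that the paper's ConvLSTM learns. All names (`Person`, `ray_hits_box`, `shared_attention_target`, `shared_attention_intervals`, `min_people`, `min_len`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


@dataclass
class Person:
    head: Tuple[float, float]  # (x, y) head center in pixels (assumed input)
    gaze: Tuple[float, float]  # 2D gaze direction vector, need not be unit length


def ray_hits_box(origin: Tuple[float, float], direction: Tuple[float, float], box: Box) -> bool:
    """Slab test: does the ray origin + t * direction (t >= 0) intersect the box?"""
    t_min, t_max = 0.0, float("inf")
    for o, d, lo, hi in ((origin[0], direction[0], box[0], box[2]),
                         (origin[1], direction[1], box[1], box[3])):
        if abs(d) < 1e-12:
            # Ray is parallel to this slab: it must already lie inside it.
            if o < lo or o > hi:
                return False
        else:
            t1, t2 = (lo - o) / d, (hi - o) / d
            if t1 > t2:
                t1, t2 = t2, t1
            t_min, t_max = max(t_min, t1), min(t_max, t2)
            if t_min > t_max:
                return False
    return True


def shared_attention_target(people: Sequence[Person], boxes: Sequence[Box],
                            min_people: int = 2) -> Optional[int]:
    """Index of the box hit by the most gaze rays, if at least min_people look at it."""
    best_idx, best_count = None, 0
    for i, box in enumerate(boxes):
        count = sum(ray_hits_box(p.head, p.gaze, box) for p in people)
        if count > best_count:
            best_idx, best_count = i, count
    return best_idx if best_count >= min_people else None


def shared_attention_intervals(frame_flags: Sequence[bool],
                               min_len: int = 3) -> List[Tuple[int, int]]:
    """Merge consecutive positive frames into inclusive (start, end) intervals,
    dropping runs shorter than min_len frames (a crude temporal-continuity rule)."""
    intervals: List[Tuple[int, int]] = []
    start = None
    for t, flag in enumerate(list(frame_flags) + [False]):  # sentinel closes last run
        if flag and start is None:
            start = t
        elif not flag and start is not None:
            if t - start >= min_len:
                intervals.append((start, t - 1))
            start = None
    return intervals
```

In a per-frame loop, `shared_attention_target(...) is not None` would give the boolean flags passed to `shared_attention_intervals`. The model described above replaces both hand-written rules with learned components: gaze and target-box features for the spatial step, and a ConvLSTM over heatmaps for the temporal step.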