Unsupervised Learning of Dictionaries of Hierarchical Compositional Models

Jifeng Dai (1, 4), Yi Hong (2), Wenze Hu (3), Song-Chun Zhu (4) and Ying Nian Wu (4)

(1) Tsinghua University, China; (2) WalmartLab, USA; (3) Google Inc, USA; (4) University of California, Los Angeles (UCLA), USA

Introduction

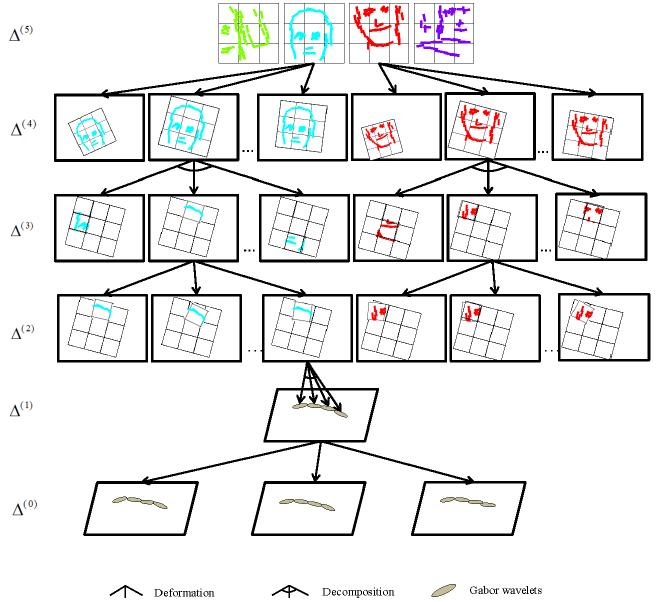

This paper proposes an unsupervised method for learning dictionaries of hierarchical compositional models for representing natural images. Each model takes the form of a template consisting of a small group of part templates that are allowed to shift their locations and orientations relative to each other. Each part template is in turn a composition of Gabor wavelets that are likewise allowed to shift their locations and orientations relative to each other. Given a set of unannotated training images, a dictionary of such hierarchical templates is learned so that each training image can be represented by a small number of templates that are spatially translated, rotated and scaled versions of the templates in the learned dictionary. The learning algorithm iterates between two steps: (1) image encoding by a template matching pursuit process, which involves a bottom-up template matching sub-process and a top-down template localization sub-process; and (2) dictionary re-learning by a shared matching pursuit process. Experimental results show that the proposed approach is capable of learning meaningful templates, and that the learned templates are useful for tasks such as domain adaptation and image cosegmentation.
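To give a rough feel for the re-learning step (2), here is a toy, one-level sketch of shared matching pursuit. It is not the authors' implementation. It assumes 1-D signals instead of images, unit-norm Gaussian bumps as a hypothetical stand-in for Gabor wavelets, and positional shifts only (no rotation or scaling). The function names `make_basis`, `response` and `shared_matching_pursuit` are illustrative. The idea shown is that a basis element is selected when it responds strongly across *all* aligned training examples at once, with each example allowed a small local shift of that element.

```python
import math


def make_basis(length, width, positions):
    """Hypothetical 1-D stand-in for Gabor wavelets: unit-norm Gaussian bumps."""
    basis = {}
    for p in positions:
        v = [math.exp(-((t - p) ** 2) / (2 * width ** 2)) for t in range(length)]
        norm = math.sqrt(sum(x * x for x in v))
        basis[p] = [x / norm for x in v]
    return basis


def response(signal, element):
    """Magnitude of the inner product between a signal and a basis element."""
    return abs(sum(s * e for s, e in zip(signal, element)))


def shared_matching_pursuit(signals, basis, n_elements, max_shift=1):
    """Greedily select basis positions shared by all signals.

    Each signal may perturb a shared element's position by up to max_shift,
    a toy analogue of the local shifts allowed within a template. After each
    selection, nearby positions are inhibited so chosen elements do not overlap.
    """
    available = set(basis)
    chosen = []
    for _ in range(n_elements):
        if not available:
            break
        best_pos, best_score = None, -1.0
        for p in sorted(available):
            # Sum over signals of each signal's best locally shifted response.
            score = sum(
                max(response(s, basis[q])
                    for q in range(p - max_shift, p + max_shift + 1)
                    if q in basis)
                for s in signals
            )
            if score > best_score:
                best_pos, best_score = p, score
        chosen.append(best_pos)
        # Inhibit neighbouring positions (simple non-maximum suppression).
        available -= set(range(best_pos - 2 * max_shift, best_pos + 2 * max_shift + 1))
    return chosen


if __name__ == "__main__":
    basis = make_basis(24, 1.5, range(24))
    # Three "training examples", each with two bumps jittered by one position.
    pairs = [(4, 16), (5, 15), (6, 14)]
    signals = [[x + y for x, y in zip(basis[a], basis[b])] for a, b in pairs]
    print(sorted(shared_matching_pursuit(signals, basis, n_elements=2)))  # prints [5, 15]
```

In the hierarchical setting described above, a selection step of this kind would be applied at each level: Gabor wavelets would be composed into part templates, and part templates into whole templates. The encoding step (1) would then re-localize the templates in each image before the next round of re-learning.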

Paper

The paper can be downloaded from here. The LaTeX source file can be downloaded from here.

Code and Data

The code and data can be downloaded from here.

Contact us

For comments or questions about the algorithm, please email daijifeng001 -at- gmail.com