Generative Modeling of Convolutional Neural Networks

Jifeng Dai¹, Yang Lu², and Ying Nian Wu²

¹ Microsoft Research, Asia; ² University of California, Los Angeles (UCLA), USA

Introduction

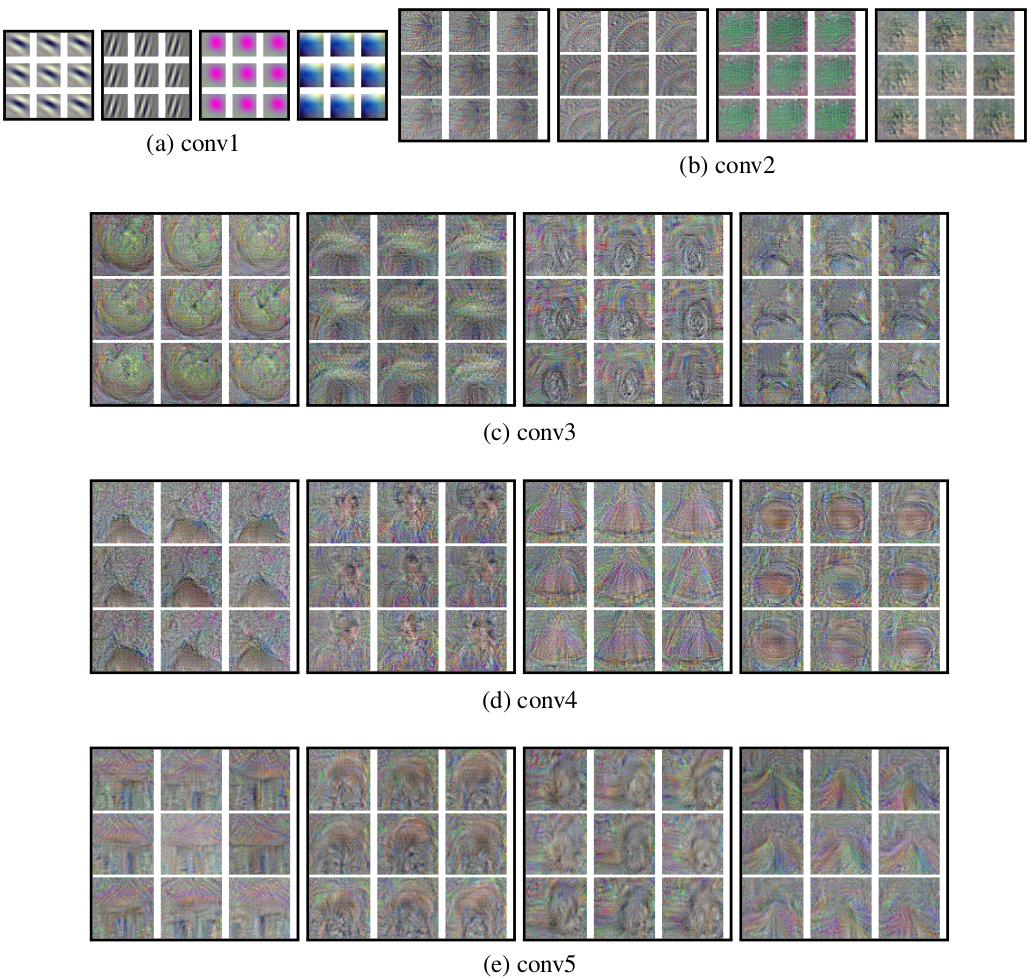

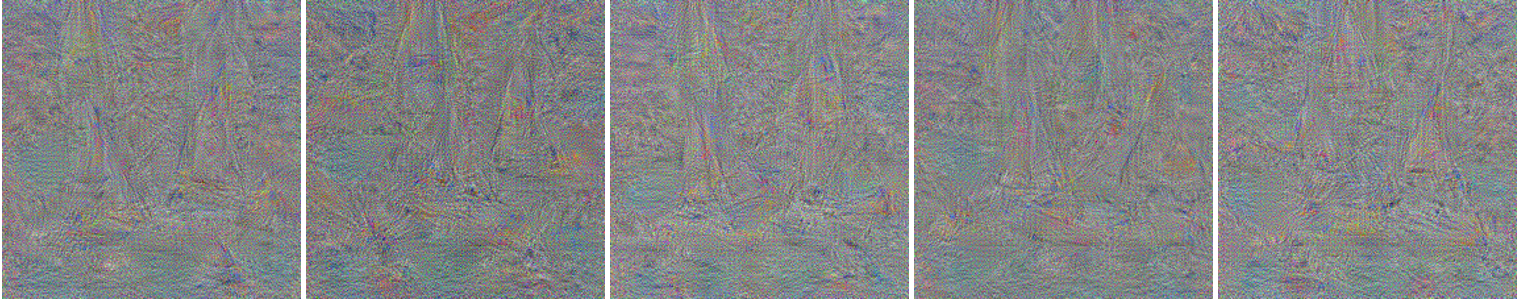

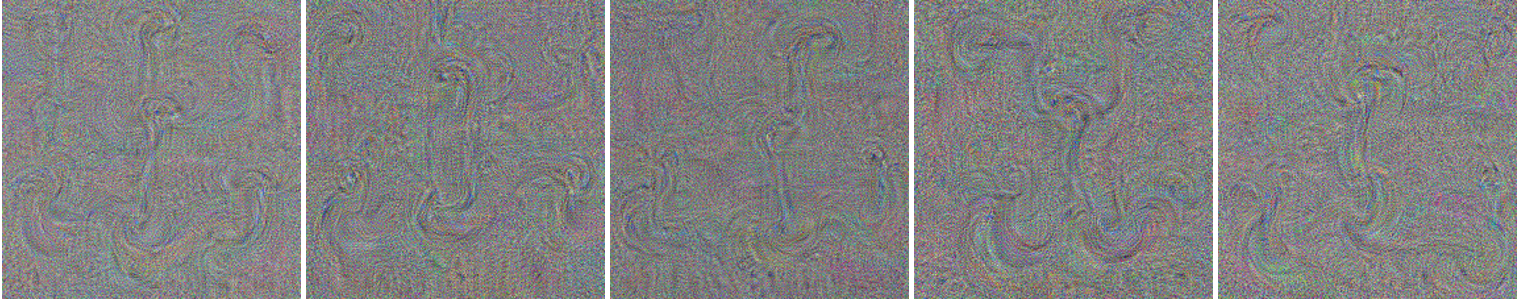

This paper investigates generative modeling of convolutional neural networks (CNNs). The main contributions are: (1) We construct a generative model for CNNs in the form of exponential tilting of a reference distribution. (2) We propose a generative gradient for pre-training CNNs by a non-parametric importance sampling scheme, which is fundamentally different from the commonly used discriminative gradient, yet has the same computational architecture and cost. (3) We propose a generative visualization method for CNNs that samples from an explicit parametric image distribution. The proposed visualization method can directly draw synthetic samples for any given node in a trained CNN by the Hamiltonian Monte Carlo (HMC) algorithm, without resorting to any extra hold-out images. Experiments on the ImageNet benchmark show that the proposed generative gradient pre-training helps improve the performance of CNNs, and that the proposed generative visualization method generates meaningful and varied samples of synthetic images from a large and deep CNN.
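To make the idea of sampling from an exponentially tilted reference distribution concrete, here is a minimal sketch on a toy problem. It is not the authors' code. It assumes a Gaussian reference distribution q(x) = N(0, sigma² I) and replaces the CNN score with a hypothetical linear score f(x) = w·x, so that the tilted density p(x), proportional to exp(f(x)) q(x), is itself Gaussian with mean sigma² w and can be checked. The sampler is plain (unadjusted) Langevin dynamics rather than HMC; the function names, step size, and chain length are illustrative choices.

```python
import random


def grad_log_density(x, w, sigma):
    """Gradient of log p(x), where p(x) is proportional to exp(w.x) * N(x; 0, sigma^2 I).

    The linear score w.x is a toy stand-in (an assumption) for a CNN score f(x).
    """
    return [wi - xi / sigma ** 2 for xi, wi in zip(x, w)]


def langevin_sample(w, sigma=1.0, step=0.5, n_steps=20000, seed=0):
    """Run unadjusted Langevin dynamics and return the list of visited states."""
    rng = random.Random(seed)
    # Initialise the chain from the reference distribution q.
    x = [rng.gauss(0.0, sigma) for _ in w]
    samples = []
    for _ in range(n_steps):
        g = grad_log_density(x, w, sigma)
        # Gradient step on log p plus Gaussian noise scaled by the step size.
        x = [
            xi + 0.5 * step ** 2 * gi + step * rng.gauss(0.0, 1.0)
            for xi, gi in zip(x, g)
        ]
        samples.append(x)
    return samples


def sample_mean(samples):
    """Coordinate-wise mean of a list of equal-length vectors."""
    n = len(samples)
    return [sum(s[i] for s in samples) / n for i in range(len(samples[0]))]


if __name__ == "__main__":
    chain = langevin_sample([1.0, -2.0])
    # Discard a burn-in prefix; the mean should be close to sigma^2 * w.
    print(sample_mean(chain[1000:]))
```

With a real CNN, the gradient of the score would come from back-propagation to the input image instead of the closed form used here.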

Paper and poster

Materials can be downloaded from Paper | Tex | Poster.

@inproceedings{Dai2015ICLR,
  author = {Dai, Jifeng and Lu, Yang and Wu, Ying Nian},
  title = {Generative Modeling of Convolutional Neural Networks},
  booktitle = {ICLR},
  year = {2015}
}

Code and Data

The code can be downloaded from here.

Synthesized Images

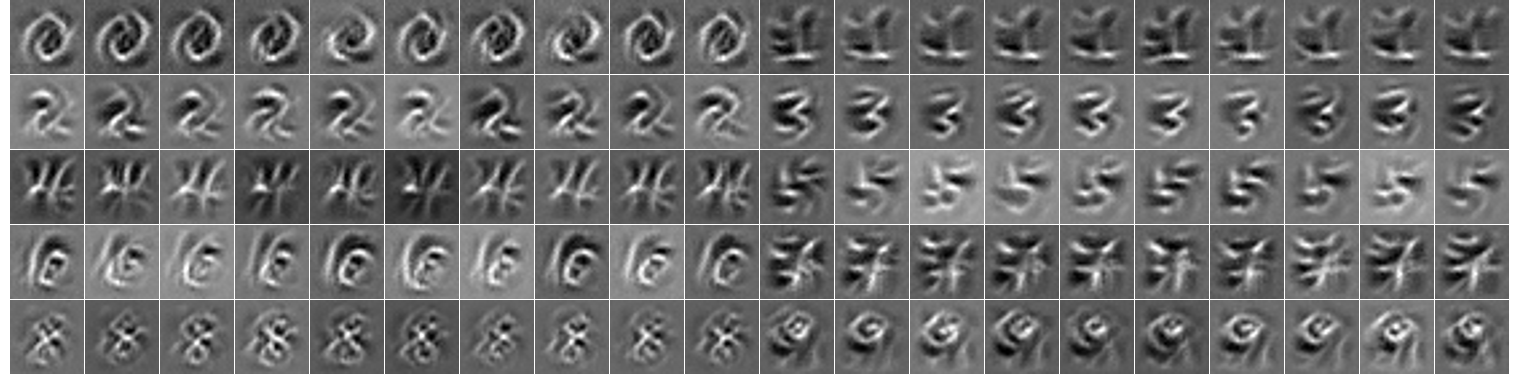

MNIST

Intermediate Layers

Boat

Claw

Goose

Hen

HorseCart

HourGlass

Knot

Lotion

Mower

Nail

Orange

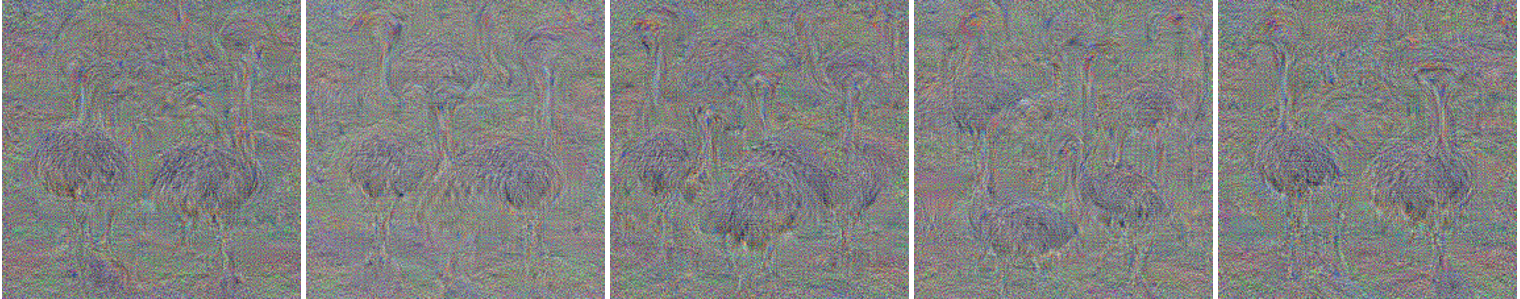

Ostrich

Panda

Peacock

Robes for Graduation

Star

Tench fish

Synthesized Images by Langevin Dynamics

Claw

Goose

Hen

HorseCart

HourGlass

Lotion

Mower

Orange

Ostrich

Panda

Star

Tench fish